Adversarial Users

Of all external validity concerns that data scientists tend to underappreciate, none is more likely to cause serious problems than the existence of adversarial users.

Adversarial users are users who attempt to subvert the intended function of a statistical or machine learning model. At first blush, adversarial users seem the stuff of spy novels and international espionage, and indeed the term encompasses people trying to deliberately cheat a system by nefarious means (e.g., the 2024 “Infinite Money Glitch” that spread on TikTok, in which people deposited fake checks and then immediately withdrew the money before the checks were invalidated). But it also covers situations in which people use a system in a manner that is entirely within its rules, but in a way the system designers did not foresee, producing outcomes the designers do not want. Indeed, adversarial users are likely to exist any time an algorithm or model is used to make decisions that are important to people.

The pervasive threat of adversarial users emerges because humans are strategic actors. Once people realize that the outcomes they care about — insurance approvals, promotions, hiring, etc. — are being influenced by a formula, they will change their behavior to respond to the incentives created by the system.

Adversarial Users as External Validity Concern

Adversarial users emerge because as soon as a model is deployed to make decisions, that deployment itself constitutes a change in how the world operates. We train our models using historical data in which people are not attempting to adapt their behavior to accommodate the model we are training. But as soon as we deploy our model, people’s behavior will begin to change in response to the deployment, immediately threatening the validity of our model.
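To make this mechanism concrete, here is a minimal sketch of behavior shifting once a decision rule is deployed. Everything in it is a hypothetical assumption chosen for illustration: the approval cutoff `THRESHOLD`, the manipulation budget `MAX_SHIFT`, the uniform distribution of true qualifications, and the rule that applicants just below the cutoff nudge their reported score up to it. It is not a model of any real system.

```python
import random

rng = random.Random(1)

THRESHOLD = 0.6   # hypothetical approval cutoff on the model's score
MAX_SHIFT = 0.15  # hypothetical: largest gap an applicant can cheaply close


def approve(score):
    """The deployed decision rule: approve anyone at or above the cutoff."""
    return score >= THRESHOLD


def strategic_report(true_score):
    """Applicants just below the cutoff inflate their reported score to reach it."""
    gap = THRESHOLD - true_score
    if 0 < gap <= MAX_SHIFT:
        return THRESHOLD
    return true_score


def summarize(true_scores, reported_scores):
    """Return (approval rate, mean TRUE score among approved applicants)."""
    approved_true = [t for t, r in zip(true_scores, reported_scores) if approve(r)]
    rate = len(approved_true) / len(true_scores)
    mean_true = sum(approved_true) / len(approved_true)
    return rate, mean_true


# True qualifications, assumed uniform on [0, 1) for simplicity.
true_scores = [rng.random() for _ in range(10_000)]

# Historical world: nobody adapts, so reported scores equal true scores.
rate_before, quality_before = summarize(true_scores, true_scores)

# Deployed world: the same people, now responding to the rule.
reported = [strategic_report(s) for s in true_scores]
rate_after, quality_after = summarize(true_scores, reported)

print(f"approval rate:          {rate_before:.2f} -> {rate_after:.2f}")
print(f"mean true score (appr): {quality_before:.2f} -> {quality_after:.2f}")
```

In this toy setup nothing about the applicants' true qualifications changes between the two worlds. Only their reporting changes, yet the rule approves more people and the people it approves are, on average, less qualified than the historical data would suggest.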

To the best of my knowledge, the term “adversarial users” is used only by computer scientists. But the idea that using any type of formula for evaluation or decision-making will immediately change how people behave (and thus undermine the validity of the formula) has a long and storied history, as immortalized by some famous “laws”:

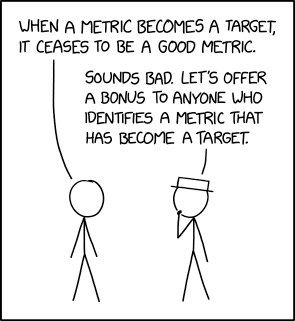

Goodhart’s Law: “When a measure becomes a target, it ceases to be a good measure.”

Campbell’s Law: “The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.”

The Lucas Critique: “Given that the structure of any [statistical] model consists of optimal decision rules of economic agents, and that optimal decision rules vary systematically with changes in the structure of series relevant to the decision maker, it follows that any change in policy will systematically alter the structure of [statistical] models.”

And since no idea is serious until it’s been immortalized in an XKCD comic:

Robograders: A Close-to-Home Example

To illustrate what “adversarial users” look like in what may feel like a familiar context, consider the Essay RoboGrader. Training an algorithm to answer the question “If a human English professor read this essay, what score would they give it?” is relatively straightforward — get a bunch of essays, give them to some English professors, then fit a supervised machine learning algorithm to that training data. What could go wrong?

The problem with this strategy is that the training data was generated by humans who knew they were writing essays for humans to read. As a result, they tried to write good essays. The machine learning algorithm then looked for correlations between the scores the human graders assigned and features of the essays, and because those correlations were strong, it could easily predict essay scores, at least on a coarse scale.

But what happens when humans realize they aren’t being graded by humans? Well, now, instead of writing for a human, they will write for the algorithm. They figure out what the algorithm rewards — big, polysyllabic words (don’t worry, doesn’t matter if they’re used correctly), long sentences, and connecting phrases like “however” — and stuff them into their essays.[1]

This works because the students who used polysyllabic words and long sentences in the training data also happened to be the students who were writing good essays. Those features were reliable predictors of scores when people wrote essays for humans. But they are not reliable predictors of essay quality in a world where students know their essays are being written for machines rather than humans.
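The breakdown of a proxy feature can be sketched with a small simulation. This is only an illustration under stated assumptions, not how any real robograder works: essay quality is a single made-up number, the only feature is average word length, the numeric relationships (`5.0`, `0.8`, the noise levels) are invented, and "gaming" means every student pads their essay to an average word length of at least 7 characters.

```python
import random

rng = random.Random(0)
N = 2000


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def fit_line(xs, ys):
    """Ordinary least squares with one feature: y is approximated by a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return my - b * mx, b


def honest_word_length(quality):
    # Assumed relationship: stronger writers happen to use longer words.
    return 5.0 + 0.8 * quality + rng.gauss(0, 0.5)


# Training world: students write for human graders, whose scores track quality.
train_quality = [rng.gauss(0, 1) for _ in range(N)]
train_length = [honest_word_length(q) for q in train_quality]
train_score = [q + rng.gauss(0, 0.3) for q in train_quality]
intercept, slope = fit_line(train_length, train_score)


def robograde(word_length):
    """The hypothetical robograder: predicts a score from word length alone."""
    return intercept + slope * word_length


# Deployed world: same kind of students, but now they know a machine is grading.
new_quality = [rng.gauss(0, 1) for _ in range(N)]
honest_length = [honest_word_length(q) for q in new_quality]
# Gaming assumption: everyone pads to an average word length of at least 7.
gamed_length = [max(h, 7.0 + rng.random()) for h in honest_length]

corr_honest = pearson([robograde(x) for x in honest_length], new_quality)
corr_gamed = pearson([robograde(x) for x in gamed_length], new_quality)
mean_gamed_grade = sum(robograde(x) for x in gamed_length) / N

print(f"grade vs. quality correlation, honest essays: {corr_honest:.2f}")
print(f"grade vs. quality correlation, gamed essays:  {corr_gamed:.2f}")
print(f"mean robograde on gamed essays: {mean_gamed_grade:.2f}")
```

The fitted model is identical in both worlds. What changes is the link between the feature and the thing we care about: once students pad their essays, word length carries almost no information about quality, and the grades drift upward even though the average true quality is still centered on zero.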

Another way of thinking about this is that we’re back to the issue of alignment problems: the system designers want the algorithm to reward good writing, but that’s not actually what they trained it to do. They trained it to predict the scores humans gave to essays that were written for humans. In this case, however, the alignment problem is rearing its head because people are actively trying to exploit that difference.